Building a Local AI Voice System (When the Hard Part Isn’t AI)

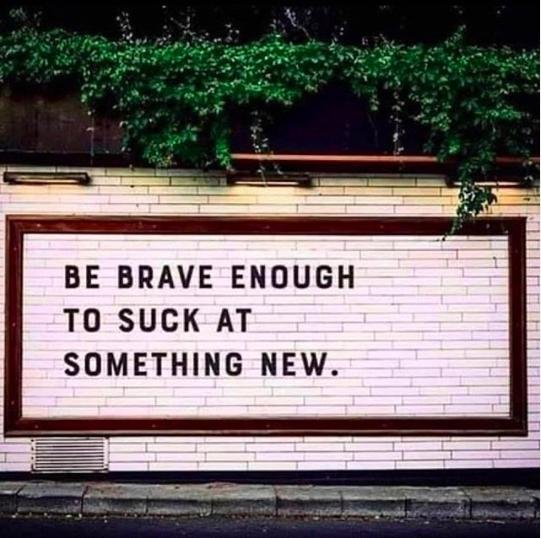

Yesterday was one of those days where progress didn’t look like progress.

On paper, the goal was simple: refine a local voice-cloning pipeline so I can turn finished text into audio using my own voice, entirely on my laptop, without cloud services, subscriptions, or dashboards.

In reality, it turned into a long, winding encounter with the friction that lives between tools, not inside them.

This is the part of building software people rarely talk about. Not the big idea. Not the demo. The part where everything technically works, but nothing quite behaves.

The idea was never “AI for AI’s sake.”

I didn’t wake up wanting to clone my voice because it’s novel.

I wanted a way to think out loud, edit deliberately, and then re-embody that writing as audio without rerecording everything.

Same philosophy as my transcription system: keep cognition flowing, remove mechanical friction, and keep judgment in human hands.

So the constraints were clear:

- Everything runs locally

- No data leaves my machine unless I choose it

- No subscriptions

- No dashboards

- Drop files in folders, get results out

That part is solid. The system exists.

But yesterday wasn’t about architecture. It was about tuning.

When “close” is the most frustrating distance

I had a working voice clone. It spoke. It sounded human.

It even sounded vaguely like me. And that was the problem.

It was almost right.

The pitch was too high. Or too constant. The cadence too fast. The pauses unnatural. It sounded like me if I were permanently mid-sentence, slightly caffeinated, and voicing a cartoon I didn’t agree to.

This is where the work stopped being about AI and started being about listening.

You can’t debug voice by reading logs. You have to hear it.

Over and over. Tiny changes. One parameter at a time. Change speed. Listen. Change pitch. Listen. Add breathing pauses. Listen again.

This is typography, not programming.

You don’t “optimize” a voice. You kern it.

Toolchains don’t fail—seams do

Nothing actually broke yesterday.

Whisper worked.

XTTS worked.

FFmpeg worked.

Homebrew worked.

Python worked.

What failed was expectation alignment between tools.

- Python versions that were technically compatible but practically hostile

- Libraries emitting warnings that weren’t errors; noise pollution

- Audio files that were “valid” but not ideal

- Defaults that made sense for demos, not humans

At one point I spent an unreasonable amount of time just trying to measure pitch properly because every shortcut tool failed quietly.

Only when I dropped down to Praat did the truth become visible: my average pitch sat right where I expected.

The voice model wasn’t “wrong”—it was overconstrained.

That realization matters. It means the fix isn’t “more data” or “better models.” It’s restraint.

The emotional tax of almost-automation

This is the part that doesn’t show up in README files.

Every detour costs attention.

Every rabbit hole costs energy.

When you’re doing this work alone, late, after already solving the hard problems, it’s easy to lose your way and start questioning decisions that were correct.

At one point I caught myself thinking: Why didn’t I just use a service?

And then immediately remembered why.

Because services hide tradeoffs. They optimize for scale, not thoughtfulness. They turn authors into operators. They’re not private.

What yesterday actually produced

Even if it didn’t feel like it, yesterday locked in something important:

- A clean voice anchor with the right pitch range

- A stable speed setting that feels human

- Real breathing and pause handling

- A repeatable way to evaluate changes instead of guessing

- A clear line between “voice quality” work and “automation” work

Most importantly, it clarified what not to do next.

- No more parameter thrashing.

- No more chasing novelty.

- No more piling automation on an unstable core.

That’s the same lesson I learned building the transcription pipeline.

- Stability first.

- Then convenience.

- Then publishing.

Why this matters beyond this project

This isn’t really about voice cloning.

It’s about building tools that respect the person using them. Tools that don’t rush. Tools that don’t decide for you. Tools that let you stay in the work instead of managing the work.

Yesterday was a reminder that the hardest part of building these systems isn’t AI. It’s patience. It’s taste. It’s knowing when to stop turning knobs and start listening.

I went to bed knowing the voice is close enough to keep going—and that’s the right place to pause.